The emergence of networking – Introduction to Serverless on AWS

The emergence of networking

Early mainframes were independent and could not communicate with one another. The idea of an Intergalactic Computer Network or Galactic Network to interconnect remote computers and share data was introduced by computer scientist J.C.R. Lick‐ lider, fondly known as Lick, in the early 1960s. The Advanced Research Projects Agency (ARPA) of the United States Department of Defense pioneered the work, which was realized in the Advanced Research Projects Agency Network (ARPANET). This was one of the early network developments that used the TCP/IP protocol, one of the main building blocks of the internet. This progress in networking was a huge step forward.

The beginning of virtualization

The 1970s saw another core technology of the modern cloud taking shape. In 1972, the release of the Virtual Machine Operating System by IBM allowed it to host multiple operating environments within a single mainframe. Building on the early time-sharing and networking concepts, virtualization filled in the other main piece of the cloud puzzle. The speed of technology iterations of the 1990s brought those ideas to realization and took us closer to the modern cloud. Virtual private networks (VPNs) and virtual machines (VMs) soon became commodities.

The term cloud computing originated in the mid to late 1990s. Some attribute it to computer giant Compaq Corporation, which mentioned it in an internal report in 1996. Others credit Professor Ramnath Chellappa and his lecture at INFORMS 1997 on an “emerging paradigm for computing.” Regardless, with the speed at which technology was evolving, the computer industry was already on a trajectory for massive innovation and growth.

The first glimpse of Amazon Web Services

As virtualization technology matured, many organizations built capabilities to auto‐ matically or programmatically provision VMs for their employees and to run busi‐ ness applications for their customers. An ecommerce company that made good use of these capabilities to support its operations was Amazon.com.

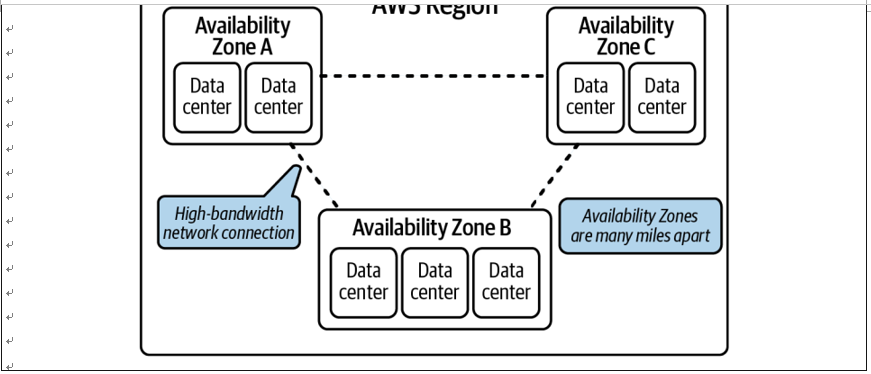

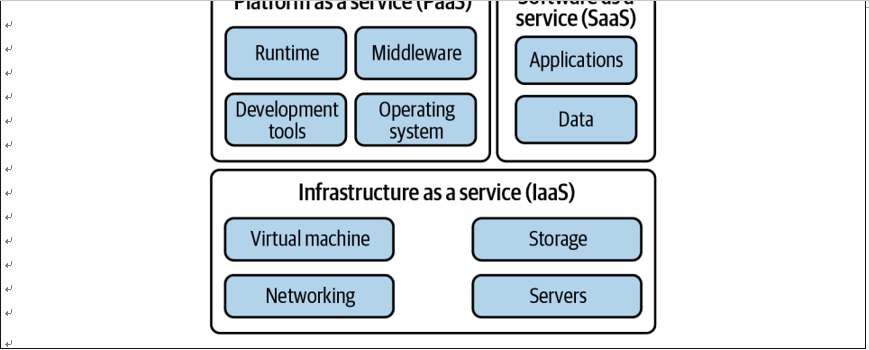

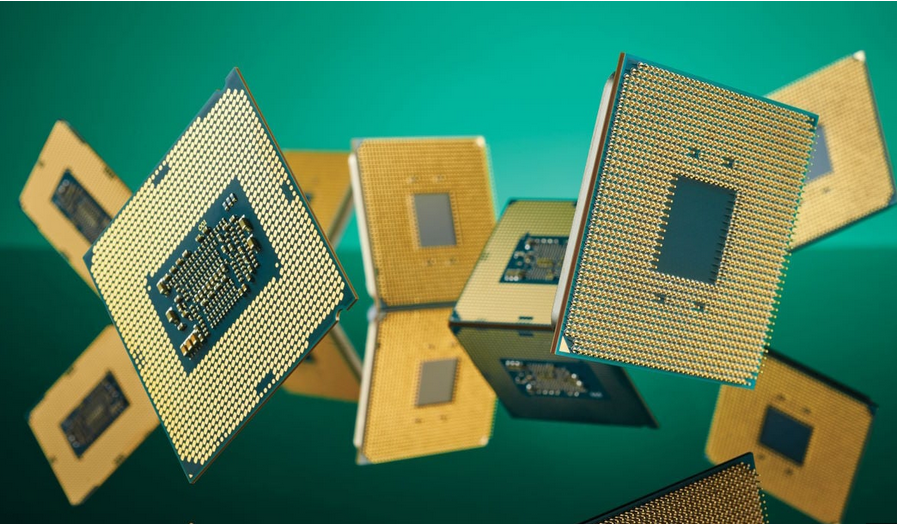

During early 2000, engineers at Amazon were exploring how their infrastructure could efficiently scale up to meet the increasing customer demand. As part of that process, they decoupled common infrastructure from applications and abstracted it as a service so that multiple teams could use it. This was the start of the concept known today as infrastructure as a service (IaaS). In the summer of 2006, the com‐ pany launched Amazon Elastic Compute Cloud (EC2) to offer virtual machines as a service in the cloud for everyone. That marked the humble beginning of today’s mammoth Amazon Web Services, popularly known as AWS!